intro

Duplicate records within a database management system are an issue of concern to every DBA. Read this blog to learn how to deal with them!

If you’re a frequent reader of this blog, you will already know that here we explore all kinds of topics: from the inner workings of MySQL to data migration. Recently we’ve explored duplicate indexes, but we haven’t yet touched upon their sibling, duplicate records. Excited? So are we!

Why are Duplicate Records an Issue?

Let’s start by saying that duplicate records are not necessarily bad: many databases contain duplicates of certain rows, and that’s not always a concern. It quickly turns into a problem, however, when we have a lot of data and run heavy maintenance operations without much insight into what’s in our database in the first place. (Remember how we told you that one type of query, ALTER, makes a copy of the table on disk?)

How to Get Rid of Duplicate Records?

There are two main ways to get rid of duplicate records within a DBMS: remove them before you import your data, or remove them after the data has already been imported.

Whichever way you choose, keep in mind that there are database tips & tricks that can help you.

Removing Duplicate Records

Before Importing Data

If you want to clean your data before importing it, use the sort command with the -u (unique) flag:


sort -u data.txt > uniq.txt

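The trick here is that native Linux commands such as sort are usually much faster at this than deduplicating with SQL after the import. Keep in mind that sort -u only treats lines as duplicates when they are identical character for character, and that its output is sorted rather than kept in the original order. If you have a lot of data, first employ a sort command in a unique fashion (see what we did there?), then import the uniquely sorted data (without duplicates) into your database.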
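Because sort -u compares whole lines, two rows that share a key but differ in another column both survive. The sketch below compares a few variations. It is only an illustration. The file name data.txt, the comma-separated layout, the choice of the first field as the key that must be unique, and the sample rows are all hypothetical. The script works in a scratch directory so it does not overwrite any real files.

```shell
#!/bin/sh
# Minimal sketch: clean a text export before loading it into a database.
# Assumptions (hypothetical): the file is called data.txt, fields are
# comma-separated, and the first field is the key that must be unique.
set -eu
export LC_ALL=C   # byte-wise comparison, so sort output is predictable

# Work in a scratch directory so no real files are overwritten.
cd "$(mktemp -d)"

# Hypothetical sample data: one exact duplicate line (1,alice) and one
# key collision with different content (2,bob vs. 2,robert).
printf '%s\n' '1,alice' '2,bob' '1,alice' '3,carol' '2,robert' > data.txt

# 1) The command from the article: drop lines that are identical character
#    for character. The result is sorted; 2,bob and 2,robert both survive.
sort -u data.txt > uniq.txt

# 2) Preview which lines are exact duplicates before throwing them away.
sort data.txt | uniq -d > dupes.txt

# 3) Keep only the first line seen for each key (field 1), in input order.
#    awk holds every key it has seen in memory. By contrast, sort can spill
#    to temporary files when the input is very large.
awk -F',' '!seen[$1]++' data.txt > uniq_by_key.txt

cat uniq_by_key.txt   # prints 1,alice, 2,bob and 3,carol, one per line
```

Variant 3 matters when the unique index you plan to add covers a single column. With sort -u alone, both 2,bob and 2,robert stay in the file, and the database would then reject one of them, or silently drop it if you use IGNORE.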

After Importing Data

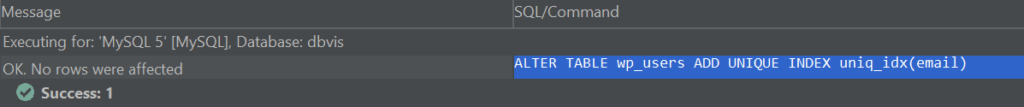

If your data has already been imported into your database management system of choice and you need to remove duplicates, you can add a unique index with the IGNORE keyword. An ordinary ALTER TABLE statement that adds a unique index looks like this:


ALTER TABLE [your_table] ADD UNIQUE INDEX idx_name(column_name);

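Add IGNORE right after the ALTER keyword to turn the query into this:

ALTER IGNORE TABLE [your_table] ADD UNIQUE INDEX idx_name(column_name);

Note that ALTER IGNORE TABLE was deprecated in MySQL 5.6 and removed in MySQL 5.7, so this trick only works on MySQL 5.6 and earlier (MariaDB still supports it).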

Then remember: ALTER TABLE queries like this one can make a copy of the table on disk, copy your data over to that copy, and then swap the tables, so be patient if you have a lot of data.

Woohoo – you got rid of duplicate records! Was it that hard?

DbVisualizer and Database Issues

If you’re reading this blog, chances are that you’ve got some duplicate record issues. And if you have duplicate record issues, chances are that you may have other kinds of database issues too. That’s where DbVisualizer comes into the scene: it’s a powerful, top-rated SQL client used by many software companies across the globe. Built for navigating complexity and supporting pretty much any DBMS you can think of, it can help you get to the bottom of your database problems quickly. Why not give it a try today?

Frequently Asked Questions

Does Record Count Have a Huge Impact on Deleting Duplicates?

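When you work directly with the database by running ALTER TABLE queries, yes: the more rows the table has, the longer it takes to copy them into the rebuilt table. In other cases, such as running sort -u on a file before importing it, record count matters far less.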

What Does the IGNORE Clause Do?

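In ALTER IGNORE TABLE, the IGNORE clause stops the statement from failing on duplicate-key errors while the unique index is built. For rows that share the same key, only the first row is kept and the rest are deleted. In other statements, such as INSERT IGNORE, it turns certain errors into warnings so the statement can continue. It does not make every possible error go away.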

Why Should I Use DbVisualizer?

Use DbVisualizer if you want a reliable, DBA-like SQL client to help you solve your database availability, performance, and security issues.

Looking for an interesting YouTube channel that covers database management?

If you want to learn more about database availability, capacity, security, or performance, consider exploring the Database Dive YouTube channel. The channel is run by one of our experts and is updated daily, so we’re sure that you will find ways to solve some of the most pressing database issues there too!