intro

Let’s learn what native tools PostgreSQL has to offer when it comes to performing full text search queries.

Terabytes and terabytes of textual data are generated daily. Here is why searching capabilities have become so paramount. Developers will know that there are multiple searching capabilities and that full-text searching is one of them. Full-text searching allows searching search with “fuzzy matching” and is supported by most database management systems, including PostgreSQL. As a versatile tool, full text search is not merely about finding exact matches but also takes into consideration the linguistic context and nuances.

In this complete tutorial, you will dig into the PostgreSQL full text search feature, understanding what it is, how it works, and unraveling its mechanics, features, and optimization techniques.

Become a master of text searches!

What Is Full Text Search in PostgreSQL?

In PostgreSQL, full text search refers to a technique used to efficiently search and retrieve relevant information from textual data stored in a database. This powerful functionality goes beyond simple pattern matching, enabling advanced queries that take into account linguistic and contextual factors. To accomplish such a complex task, PostgreSQL must perform some preprocessing and optimization to improve the accuracy and relevance of the search.

The PostgreSQL full text search feature supports multiple languages to cover as many linguistic nuances as possible. This is invaluable for applications requiring efficient and contextually relevant searches, such as in autocomplete operations, content tagging, and multi-field searches.

How Does PostgreSQL Full Text Search Work?

Here is the list of operations that PostgreSQL executes behind the scenes to perform a full text search:

As you can see, full text search in PostgreSQL involves several key concepts. These include tokenization, stemming, ranking, and more. In detail, the key elements to be kept in mind are:

PostgreSQL Full Text Search Functions and Operators

PostgreSQL provides a rich set of functions and operators for working with full text search. Each of these elements plays a distinct role in constructing, executing, and optimizing search queries. Assume you have two products with their descriptions stored in a products table.

Product 1 has the following text description:

Experience comfort every day with this versatile cotton t-shirt. Crafted from soft, breathable fabric, this shirt ensures a cozy fit throughout your daily activities. Its lightweight design makes it an ideal choice for everyday wear, offering both style and ease. Whether you're lounging at home or heading out, this comfortable t-shirt delivers a relaxed yet stylish look that effortlessly complements any casual outfit. Enjoy the perfect blend of comfort and practicality with this essential wardrobe piece.

While product 2 is described as:

These timeless sneakers are a great wear, perfectly suited for both athletic pursuits and relaxed, everyday outfits. With a design that seamlessly combines style and comfort, these classic sneakers ensure a comfortable fit during sports activities or casual strolls. Crafted for sports, these shoes offer a lightweight and breathable experience, making them suitable for various occasions. Whether you're hitting the gym or enjoying a leisurely outing, these sneakers deliver a blend of practicality and fashion. From sports to casual wear, these shoes effortlessly match your active lifestyle and personal style.

To better understand how these functions and operators work, let's apply them to the above text.

to_tsvector(): Convert a Document to a Text Search Data Structure

The to_tsvector() function converts text into the tsvector data type, which is optimized for text search. In particular, it applies tokenization, stemming, and dictionary rules to the input text.

to_tsvector() accepts two parameters:

Example:

1

SELECT

2

"id",

3

to_tsvector('english', "description") AS ts

4

FROM

5

"products";

As you can see, the description of product 1 becomes:

'activ':26 'blend':72 'breathabl':15 'casual':67 'choic':34 'comfort':2,53,74 'complement':65 'cotton':8 'cozi':21 'craft':12 'daili':25 'day':4 'deliv':57 'design':29 'eas':42 'effortless':64 'enjoy':69 'ensur':19 'essenti':79 'everi':3 'everyday':36 'experi':1 'fabric':16 'fit':22 'head':50 'home':48 'ideal':33 'lightweight':28 'look':62 'loung':46 'make':30 'offer':38 'outfit':68 'perfect':71 'piec':81 'practic':76 're':45 'relax':59 'shirt':11,18,56 'soft':14 'style':40 'stylish':61 't-shirt':9,54 'throughout':23 'versatil':7 'wardrob':80 'wear':37 'whether':43 'yet':60

Every word has been normalized as a lexeme in English. For instance, “perfectly” is now “perfect.”

Note that the input document can also be composed of several concatenated columns:

1

SELECT

2

"id",

3

to_tsvector('english', "name" || ' ' || "description") AS ts

4

FROM

5

"products";

In PostgreSQL, || is the text-concatenate operator.

For better results in terms of ranking, you can also concatenate several ts_vector elements:

1

SELECT

2

"id",

3

to_tsvector('english', "name") || ' ' || to_tsvector('english', "description") AS ts

4

FROM

5

"products";

to_tsquery(): Convert Text to a Full Text Search PostgreSQL Query

The to_tsquery() function converts text into a tsquery data type, which represents a search query. Specifically, it processes the input text to generate query terms based on the specified operators.

Example:

1

SELECT to_tsquery('english', 'perfectly') AS ts_query;

This will produce:

'perfect'

As you can notice, perfectly becomes perfect. Note that to_tsquery() only accepts single words or expressions using text search operators. If you want to convert a phrase to ts_query(), you need to use the phraseto_tsquery() function:

1

SELECT phraseto_tsquery('english', 'it perfectly fits') AS ts_query;

@@: Match Operator

The match operator @@ is used to perform a text search within a tsvector column using a tsquery. In detail, it checks if the tsvector matches the terms and conditions specified in tsquery.

Example:

1

SELECT

2

"id",

3

"name",

4

"description"

5

FROM

6

"products"

7

WHERE

8

to_tsvector('english', "description") @@ to_tsquery('english', 'shoe');

Neither product contains the word "shoe" in their descriptions, but product 2 contains “shoes,” whose lexeme is “shoe”. That is why the query selects the second product.

& and <->: And and Proximity Operators for Searching For Phrases

The AND operator & is used within a tsquery to specify that all terms connected by the operator must be present in the search results.

Example:

1

SELECT

2

"id",

3

"name",

4

"description"

5

FROM

6

"products"

7

WHERE

8

to_tsvector('english', "description") @@ to_tsquery('english', 'casual & wear');

This query selects both products as they both contain “casual” and “wear” in their descriptions.

Instead, the proximity operator <-> performs a proximity search, looking for terms at a certain distance from each other. This can be used to find words that are closely related.

Example:

1

SELECT

2

"id",

3

"name",

4

"description"

5

FROM

6

"products"

7

WHERE

8

to_tsvector('english', "description") @@ to_tsquery('english', 'casual <-> wear');

In this case, you get only product 2 as its description contains the sentence “From sports to casual wear.”

ts_rank(): Rank Search Results

The ts_rank() function calculates a ranking for search results based on their relevance to the query. It takes into account factors such as the frequency of matches and their positions.

Example:

1

SELECT

2

"id",

3

"name",

4

"description",

5

ts_rank(to_tsvector('english', "description"), to_tsquery('english', 'casual & wear')) AS rank

6

FROM

7

"products";

Use the setweight() function to assign different weights to different parts of the tsvector document as explained in the docs to influence the ranking of search results.

Other Full Text Search Functions and Operators

Here are some additional functions and operators you should know:

Complete Example: From Zero to Hero

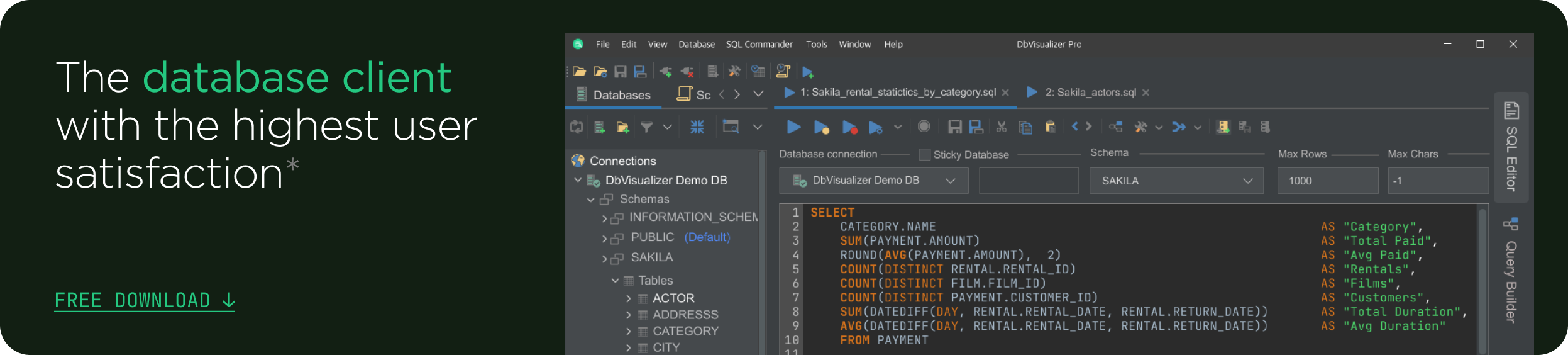

Let’s now consider a step-by-step example to understand how to prepare a table for full text search queries in PostgreSQL. DbVisualizer is the database client chosen to support this section of the guide.

1. Add a ts_vector Generated Column With All Text Fields of Interest

Suppose you have the following table:

1

CREATE TABLE

2

products

3

(

4

id INTEGER DEFAULT nextval('product_id_seq1'::regclass) NOT NULL,

5

NAME CHARACTER VARYING(255) NOT NULL,

6

price NUMERIC(10,2) NOT NULL,

7

description TEXT,

8

tagline TEXT,

9

CONSTRAINT product_pkey1 PRIMARY KEY (id)

10

);

Given the table definition, you are probably interested in running full-text search queries on the name, description, and tagline columns. You could write a query involving the statement below all the time:

1

to_tsvector('english', "name") || ' ' || to_tsvector('english', "description") || ' ' || to_tsvector('english', "tagline")

But this introduces overheads, as PostgreSQL has to generate the field on the fly for each row. To avoid that and make your queries faster, you can define a stored generated column of tsvector type as follows:

1

ALTER TABLE

2

"products" ADD COLUMN "ts" tsvector GENERATED ALWAYS AS (to_tsvector('english', "name") || ' '

3

|| to_tsvector('english', "description") || ' ' || to_tsvector('english', "tagline")) STORED;

Check out our complete guide on generated columns to learn more about what they are on how they work.

2. Define a GIN Index on It

As recommended by the PostgreSQL documentation, GIN is the preferred indexing type for text

search. So, create a GIN index on ts as below:

1

CREATE INDEX "ts_idx" ON "products" USING GIN("ts");

In DbVisualizer, you can visually verify that the index has been created in the right-hand menu:

3. Perform Your Full Text Search Query

You can now write your text search PostgreSQL queries directly on ts:

1

SELECT

2

"id",

3

"name",

4

"description"

5

FROM

6

"products"

7

WHERE

8

"ts" @@ to_tsquery('english', 'sport');

Note that you longer have to transform the text document with to_tsvector() because ts is already of the right type.

Et voilà! You are now a PostgreSQL full text search expert!

Conclusion

In this article, you saw that PostgreSQL's full text search enhances search accuracy through stemming, tokenization, and language-specific considerations. Thanks to what you learned here, you are now equipped with the expertise required to write advanced text search queries.

To handle such a powerful function, you need to rely on an equally powerful database client. This is where DbVisualizer comes into play! In addition to supporting all PostgreSQL search functions and operators, this tool also offers advanced query optimization capabilities in dozens of DBMSs. Take your full-text queries to the next level. Download DbVisualizer for free now!

FAQ

PostgreSQL full text search vs. Elasticsearch: What is the difference?

PostgreSQL full text search and Elasticsearch are both powerful search solutions, but they differ in key aspects. PostgreSQL integrates text search within the database, suitable for applications where text search is an additional feature. Instead, Elasticsearch is a dedicated search engine known for scalability and real-time indexing, ideal for complex search-focused projects with massive datasets. The choice between the two depends on the project's scale, requirements, and emphasis on search capabilities.

Is full text search in PostgreSQL fast?

Yes, full text search is efficient and performs well for many use cases. PostgreSQL uses optimized data structures to achieve great performance and also provides special text indexing capabilities. At the same time, performance depends on factors such as dataset size, indexing strategy, and query complexity. Proper configuration must be considered too.

What are some PostgreSQL full text searching use cases?

PostgreSQL search functionality excels in several advanced use cases, such as autocomplete, complex text searches involving proximity searches and partial word matching, and also empowers content suggestions.

How does PostgreSQL's full text search handle language-specific stemming and tokenization?

The text search PostgreSQL feature employs language-specific stemming and tokenization. Tokenization divides text into tokens, following language-specific rules, while stemming reduces words to their root form to increase the chances of matching. These processes improve search accuracy by taking into account different word forms and linguistic nuances, resulting in more relevant search results.

What are some best practices to follow when using full text search in PostgreSQL?

When doing text searches in PostgreSQL, you can follow these best practices: