intro

Database performance is a crucial part of any application. As databases and the tables within them get larger, though, performance starts to drop – here’s why that happens and how to fix the problem.

Database performance is an issue for every developer and DBA alike, and with larger tables the issue often gets even worse. Feel free to explore StackOverflow for questions about big data and databases: can you even count how many of them there are? Yeah, we can’t either. Big data is one of the core use cases of relational and non-relational databases alike: the more data we have, the harder it becomes to manage it properly. So, why does our database performance drop when large tables are in use?

Understanding Big Data

To answer that question, we need to understand big data in and of itself. Big data, as defined by multiple sources, refers to data sets so large that ordinary shared hosting or VPS servers are too weak to deal with them. Furthermore, big data sets are often used for data analytics: analyzing them often reveals patterns and trends that can be used as part of a blog, a business presentation, or an investor pitch.

Herein lies the problem as well: as there is more and more data on the web, working with it is a hassle for many. All database experts will agree that working with less data is less painful than working with a lot of data. The reason is plain and simple: the more data we have, the more problems occur. These problems are not limited to database performance; they may also involve availability, capacity, and security. Glue everything together and you will have an extremely serious problem on your hands, and fixing it before it drowns you would be great.

Databases and Big Data

As far as databases and big data are concerned, the first problem lies in a poor choice of database management system. Many are automatically drawn to non-relational database management systems like MongoDB, but before making a decision, we definitely need to evaluate all of the parameters:

The first question is perhaps the most important – ACID compliance would be great if we want data consistency at the price of slower insert speeds, while BASE compliance would be great if we’d rather sacrifice consistency, but work with JSON-based data at the same time.
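To make the consistency side of that trade-off concrete, here is a minimal sketch of atomicity, the "A" in ACID. It uses Python's built-in `sqlite3` module purely as a stand-in for an ACID-compliant database; the `accounts` table, the `transfer` helper, and the "no negative balance" rule are all hypothetical and exist only for illustration.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` from `src` to `dst`; either both updates apply or neither does."""
    try:
        # Used as a context manager, the connection commits on success
        # and rolls back if an exception escapes the block.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            (balance,) = conn.execute(
                "SELECT balance FROM accounts WHERE name = ?", (src,)
            ).fetchone()
            if balance < 0:  # hypothetical business rule
                raise ValueError("insufficient funds")
        return True
    except ValueError:
        return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

ok = transfer(conn, "alice", "bob", 30)       # fits within alice's balance
failed = transfer(conn, "alice", "bob", 500)  # would overdraw, so it is rolled back
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
print(ok, failed, balances)
```

The second transfer leaves no half-applied update behind. That guarantee is what we pay for with the extra bookkeeping on writes.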

Choosing a database management system to base our big data project on is also crucial. After weighing the first question, though, the path becomes clear: only a handful of DBMSes offer ACID or BASE properties, so we’ll need to choose from them. If we have experience working with the database management system, that’s great. If not, well, we will have to learn.

After we’ve chosen a database management system according to our requirements, it’s time to get to the main question – why is it slow when working with large tables?

Why Are Our Databases Slow?

The most complex questions often have the simplest answers, and believe it or not, databases are no exception. Our databases are slow for one or more of the following reasons:

Optimizing Our Servers

Before embarking on any kind of project, all DBAs and developers should check whether their servers can even run big data sets the way they require. MySQL will be a great choice for those who need ACID compliance, while MongoDB will be better for projects that follow the Basically Available, Soft state, Eventually consistent (BASE) model. Servers are important too, though: do we have so much data that a dedicated server is necessary, or will a VPS do instead?

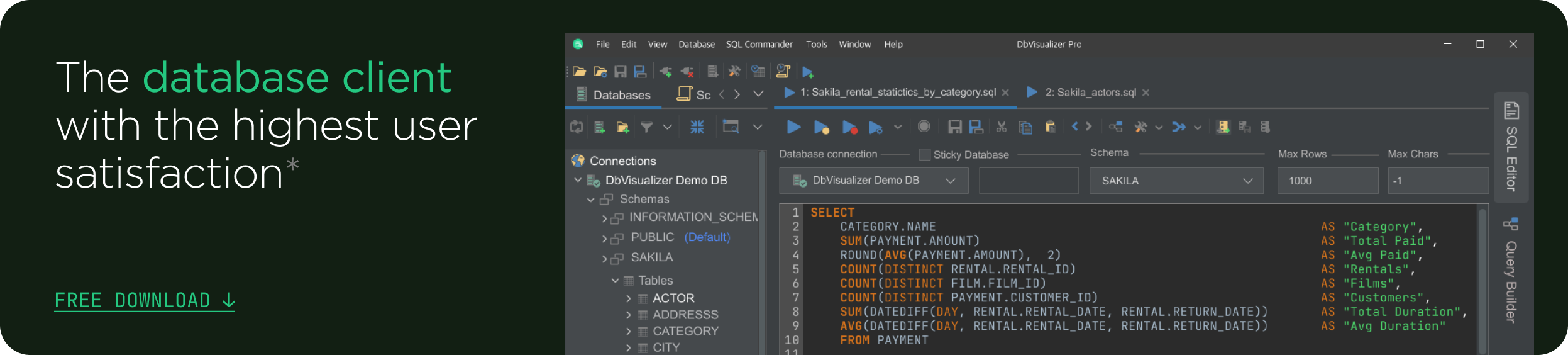


It would be great to start from a VPS and switch to a dedicated server when the situation requires it. That way, we save money and don’t waste resources either.

Choosing a server is only one piece of the puzzle, though. After we have chosen a DBMS for our project, it’s time to optimize it as well. Turn to the documentation and read the performance sections thoroughly. In many cases, you will see that the database management system you’ve elected to use has multiple storage engines to choose from, and different storage engines work differently. Did we mention that storage engines can be tuned through configuration files that expose the parameters of the database?

These files are as follows:

Get the gist already? Now turn to the documentation once again to decide which storage engine you should use, and optimize the necessary parameters for performance, availability, security, and capacity. You may want to do everything in a local environment first, as there will surely be room for errors to occur.
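As a rough illustration of what tuning one such parameter can look like, the sketch below sizes a memory cache as a fraction of the server's RAM and prints a configuration fragment. Everything specific here is an assumption rather than something this article prescribes: it assumes MySQL with the InnoDB storage engine and its `innodb_buffer_pool_size` setting in the `[mysqld]` section, the helper names are hypothetical, and the fraction is a rule of thumb you should check against the documentation for your own workload.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def suggest_buffer_pool_bytes(total_ram_bytes, fraction=0.6):
    """Return a candidate buffer pool size as a fraction of total RAM.

    The default fraction is an assumed starting point, not a vendor
    recommendation; a server shared with other services needs less.
    """
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return int(total_ram_bytes * fraction)


def render_fragment(pool_bytes):
    """Render a config fragment with the size expressed in mebibytes."""
    return "[mysqld]\ninnodb_buffer_pool_size = {}M\n".format(pool_bytes // MIB)


# Example: a server with 8 GiB of RAM, giving half of it to the buffer pool.
fragment = render_fragment(suggest_buffer_pool_bytes(8 * GIB, fraction=0.5))
print(fragment)
```

Trying a change like this locally first, as suggested above, makes it easy to compare query timings before and after.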

Optimizing Searching for Data

Now that you’ve decided which database management system to employ and optimized all of the necessary parameters, optimize searching for data too, or you will be heading for trouble. Keep the following aspects in mind:

Optimizing Database Management

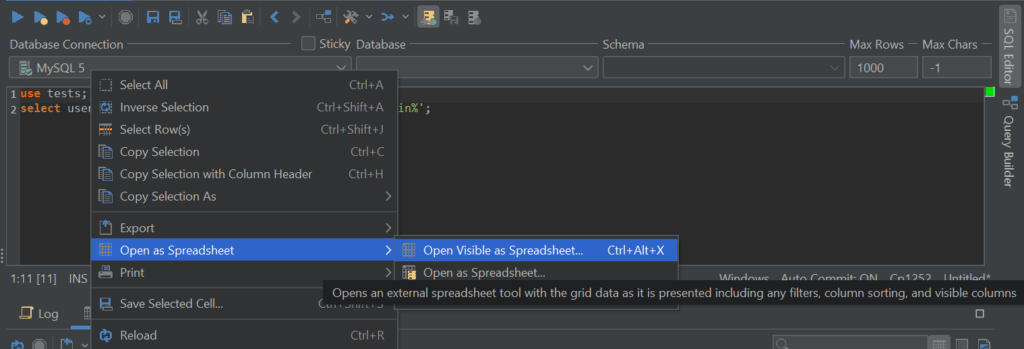

Optimizing servers and queries is great, but there’s one more step to take before you can feel at ease: optimizing database operations with SQL clients or database management tools. One such tool is DbVisualizer. It is used by top-notch companies across the globe, including Uber, Google, Volkswagen, Netflix, Twitter, Tesla, and NASA, it has millions of users, and it’s built for managing complex database environments, so managing database management systems while working with big data sets will be a breeze. DbVisualizer can help you visualize the data in your databases in the form of a nice graph, and it will also help you optimize your databases and do other work as well. Did we mention that DbVisualizer users can visualize data through Microsoft Excel too?

Once all three aspects (servers, searching for data, and database management operations) are optimized, remember to keep an eye on your databases with SQL clients and database management software such as DbVisualizer. Keeping up with the news in the database space is also easier than ever, so make sure to do that, evaluate the tool for your use case, and until next time!

FAQs

Why Are My Databases Slow?

Your databases are most likely slow because of one or more of the following reasons:

How Do I Optimize Searching for Data?

Consider selecting as few rows as possible, as well as partitioning and indexing your tables where necessary.
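Here is a small sketch of two of those ideas, selecting fewer rows and indexing. It uses Python's built-in `sqlite3` module as a stand-in for your database; the `orders` table, its columns, and the index name are hypothetical. The query plan is printed before and after the index is created so you can compare the two.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    ((i % 1000, float(i)) for i in range(50_000)),
)
conn.commit()


def plan(sql, params=()):
    """Return SQLite's description of how it intends to run `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # fourth column holds the detail text


# Ask only for the columns and the number of rows we actually need.
query = "SELECT id, total FROM orders WHERE customer_id = ? LIMIT 10"

before = plan(query, (42,))  # no index yet, so the table has to be scanned
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query, (42,))   # the plan can now use the new index

rows = conn.execute(query, (42,)).fetchall()
print(before)
print(after)
print(len(rows))
```

The exact wording of the plan varies between SQLite versions, and other database management systems have their own equivalents of `EXPLAIN`, so treat the output as a guide rather than a fixed format.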