
Discover the real-time data pipeline and Change Data Capture (CDC) tools leading the way in 2025. In this guide, we explore these solutions and their features and explain how organizations are using them.

In 2025, the database landscape is no longer only about relational and NoSQL databases. Real-time data pipelines and CDC tools entered the picture a long time ago, and things have changed since. Today, we are reviewing the best real-time data pipeline and CDC tools in 2025.

What is a Data Pipeline?

To begin with, we have to understand what we’re talking about. Think of a data pipeline as a transportation system that moves data from point A, which may be an initial storage location, to point B, our destination. A data pipeline doesn’t only move data; it often also organizes or cleans the data along the way.

These days, many companies build their own data pipelines tailored to their specific use cases.
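
The idea can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the record fields, the `SOURCE_ROWS` list standing in for point A, and the `warehouse` list standing in for point B are all assumptions made up for the example.

```python
from typing import Iterable, Iterator

# Hypothetical raw records at "point A"; the field names are illustrative only.
SOURCE_ROWS = [
    {"id": 1, "email": "  Alice@Example.com "},
    {"id": 2, "email": None},  # unusable record: no email
    {"id": 3, "email": "bob@example.com"},
]


def extract(rows: Iterable[dict]) -> Iterator[dict]:
    """Read records from the source one at a time."""
    for row in rows:
        yield dict(row)  # copy, so the source is never mutated


def clean(rows: Iterable[dict]) -> Iterator[dict]:
    """Drop records without an email and normalize the rest."""
    for row in rows:
        if not row["email"]:
            continue
        row["email"] = row["email"].strip().lower()
        yield row


def load(rows: Iterable[dict], destination: list) -> int:
    """Append cleaned records to the destination and return the count."""
    count = 0
    for row in rows:
        destination.append(row)
        count += 1
    return count


def run_pipeline(source: Iterable[dict], destination: list) -> int:
    """Move data from the source to the destination, cleaning it on the way."""
    return load(clean(extract(source)), destination)


warehouse: list = []  # stand-in for "point B"
loaded = run_pipeline(SOURCE_ROWS, warehouse)
```

Because each stage is a generator, records flow through one at a time instead of being copied in bulk between steps, which is the same shape real pipelines take at a much larger scale.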

What is a CDC?

CDC is a term closely related to data pipelines. CDC stands for Change Data Capture, and it is itself a kind of data pipeline: it monitors changes to data in a database like PostgreSQL, SQL Server, or MySQL, and then delivers those changes to a data warehouse or a similar endpoint.

A Change Data Capture mechanism makes data replication and ETL processes easier. Software that facilitates ETL uses CDC to replicate database changes to data warehouses. First, the software captures changes in the source database. Then it streams the changes as events through a messaging system like Apache Kafka (discussed further in this article). Next, the data may be transformed and processed. Finally, it is ingested into a data warehouse or a similar system.
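
The capture-then-apply flow can be sketched as follows. Note the simplification: this example detects changes by comparing two snapshots of a table, whereas production CDC tools typically read the database’s transaction log instead. The `ChangeEvent` class and both function names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    op: str              # "insert", "update", or "delete"
    key: int             # primary key of the affected row
    row: Optional[dict]  # new row state; None for deletes


def capture_changes(before: dict, after: dict) -> list:
    """Compare two snapshots keyed by primary key and emit change events."""
    events = []
    for key in sorted(after.keys() - before.keys()):
        events.append(ChangeEvent("insert", key, after[key]))
    for key in sorted(after.keys() & before.keys()):
        if after[key] != before[key]:
            events.append(ChangeEvent("update", key, after[key]))
    for key in sorted(before.keys() - after.keys()):
        events.append(ChangeEvent("delete", key, None))
    return events


def apply_changes(target: dict, events: list) -> None:
    """Replay change events against a replica (for example, a warehouse table)."""
    for event in events:
        if event.op == "delete":
            target.pop(event.key, None)
        else:
            target[event.key] = dict(event.row)


# Source table state before and after some writes (made-up data).
before = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
after = {1: {"name": "Ada L."}, 3: {"name": "Cy"}}

replica = {key: dict(row) for key, row in before.items()}
events = capture_changes(before, after)
apply_changes(replica, events)
```

After the events are applied, `replica` matches `after` without the whole table having been copied again; only the three changes travelled through the pipeline.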

The Best Data Pipeline and CDC Tools in 2025

When it comes to the best data pipeline and CDC tools in 2025, we’ll argue that some of the best tools are those with a long-standing reputation. Here are some of our top picks.

Apache Kafka

Apache Kafka is a distributed event streaming platform. This tool is designed to handle big data sets and is one of the primary choices for developers building real-time data pipelines and related applications.

Developers primarily use Apache Kafka to build applications that focus on real-time data streaming and processing. Apache Kafka is used to collect and combine data from multiple sources to facilitate real-time data processing or analytics.

Here’s a graphic demonstration of how Apache Kafka works when processing data (source: Heroku):

To use Apache Kafka, you need Java 17 or above on your system, and it helps to know your way around Docker containers. Kafka is built around topics and events: a “topic” is loosely akin to a table, and an event is akin to a record inside that table, although events are appended to a topic rather than updated in place. In essence, you first create a topic, write events to it, and then read those events back by connecting to the instance where Apache Kafka is running. Many CDC pipelines work in a similar fashion, publishing each captured change as an event. You can read more about Apache Kafka in its documentation.
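
To make the topic-and-event model concrete, here is a toy in-memory model written in plain Python. It is not the Kafka client API and leaves out partitions, brokers, and persistence; the `Topic` and `Consumer` classes are hypothetical names used only to show how an append-only log with per-consumer read positions behaves.

```python
class Topic:
    """Toy stand-in for a topic: an append-only list of events."""

    def __init__(self, name: str):
        self.name = name
        self._log = []

    def append(self, key, value) -> int:
        """Write an event to the end of the log and return its offset."""
        self._log.append((key, value))
        return len(self._log) - 1

    def read_from(self, offset: int) -> list:
        """Return every event at or after the given offset."""
        return self._log[offset:]


class Consumer:
    """Reads a topic from its own position, independently of other readers."""

    def __init__(self, topic: Topic):
        self._topic = topic
        self.offset = 0

    def poll(self) -> list:
        """Fetch events not yet seen by this consumer and advance its offset."""
        events = self._topic.read_from(self.offset)
        self.offset += len(events)
        return events


# 1. Create a topic, 2. write events to it, 3. read them back.
orders = Topic("orders")
first_offset = orders.append("order-1", {"total": 20})
orders.append("order-2", {"total": 35})

billing = Consumer(orders)
first_batch = billing.poll()   # both events written so far

# A change to order-1 is a new event, not an in-place update.
orders.append("order-1", {"total": 25})
second_batch = billing.poll()  # only the newly appended event

audit = Consumer(orders)       # a late reader still sees the full history
audit_batch = audit.poll()
```

This is where the table analogy stops: the log keeps both versions of `order-1`, and each consumer decides for itself how far it has read.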

Pros of Apache Kafka

Pros of Apache Kafka include:

| Pro | Explanation |
|---|---|
| High throughput | Apache Kafka can ingest millions of messages per second on a suitably sized cluster. |
| Easy scalability | Apache Kafka scales horizontally: you add more brokers to the cluster. |
| Low latency | Apache Kafka is designed for real-time data processing and analytics, so it adds minimal delay when delivering events. |

Cons of Apache Kafka

Cons of Apache Kafka include:

| Con | Explanation |
|---|---|
| Deployment | Inexperienced users may find setting up Apache Kafka tedious and time-consuming. |
| Maintenance | Apache Kafka requires careful planning, tuning, and ongoing maintenance, and it has a steep learning curve for those unfamiliar with distributed systems. |
| Resources | Apache Kafka can be rather resource-intensive. If you’re using it, make sure you have adequate hardware and network resources, and check the minimum requirements for a Kafka cluster before you deploy. |

Confluent

Confluent can be thought of as an extension of Apache Kafka: it is a commercial offering built around the tool that adds enterprise features and a set of managed services to help you manage data pipelines.

Developers frequently use Confluent to extend the capabilities of Kafka: the tool makes it easier for organizations to build, scale, and operate real-time data pipelines and streaming applications.

Because Confluent builds on Apache Kafka, its architecture follows the same model of topics and events, and Confluent can be used from a variety of programming languages and for a variety of use cases. Here’s a basic drawing:

Pros of Confluent

The pros of Confluent are as follows:

| Pro | Explanation |
|---|---|
| Built on open-source software | Confluent builds on open-source, community-driven Apache Kafka and adds its own components and commercial features on top. |
| Easy to use | Confluent is known for its user-friendly interface and easy-to-manage data pipelines. |
| Support for many data sources | Confluent supports many data sources and destinations through pre-built connectors. |

Cons of Confluent

The cons of Confluent are as follows:

| Con | Explanation |
|---|---|
| Newer than Kafka itself | Confluent’s platform is younger than Apache Kafka, which may give some cautious users pause. |
| Limited transformation features | The connector layer focuses on data ingestion, so its built-in transformation features are basic. |
| Enterprise features are paid | Enterprise-wide support and many enterprise features come with the commercial offering, which may not suit every budget. |

Airbyte

Airbyte is an open-source data integration platform that supports CDC and loading data in bulk. Airbyte is known for its extensibility, real-time synchronization, and community support.

To set up Airbyte, first set up Docker, then install a tool that helps you deploy and manage it, then start Airbyte and begin moving your data from sources to destinations (these play a role loosely similar to the producing and consuming ends of an Apache Kafka pipeline).

Airbyte also has a rather complex dashboard that lets you monitor the deployment process of the tool (source - Airbyte):

Pros of Airbyte

The pros of Airbyte are as follows:

| Pro | Explanation |
|---|---|
| Ease of use | Airbyte is reportedly very easy to use, and its data pipelines are easy to manage. |
| Support for custom data connectors | Airbyte supports the creation of custom data connectors out of the box. |
| Support for many data sources | Airbyte supports many data sources and destinations without the need for add-ons or additional setup. |

Cons of Airbyte

The cons of Airbyte are as follows:

| Con | Explanation |
|---|---|
| Focus on data ingestion | Airbyte focuses on data ingestion. Features facilitating data transformation are basic. |
| Resource intensive for complex data pipelines | Complex data pipelines can make Airbyte rather resource intensive. |
| Deployment | If you aim to self-host Airbyte, doing so may require additional effort. |

Additional Things You Need to Know

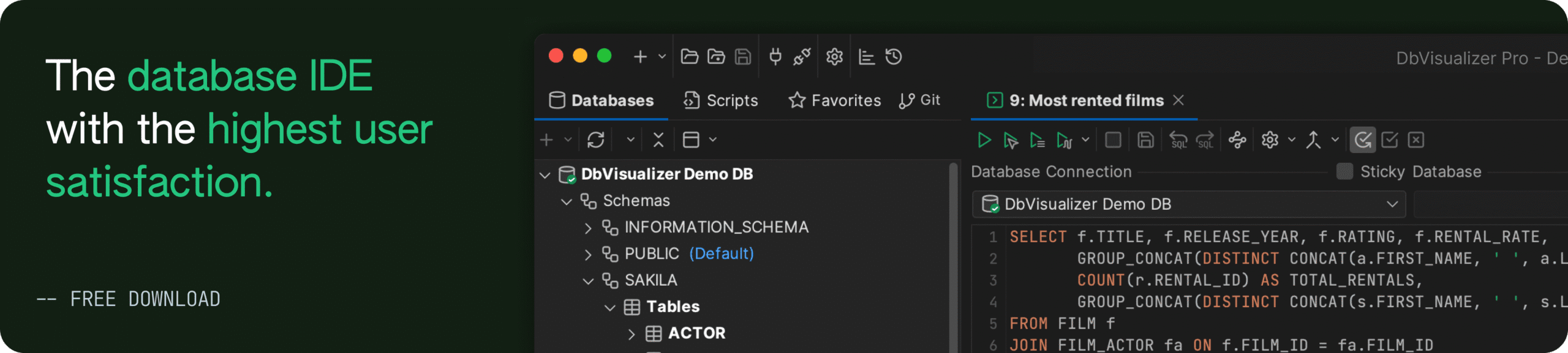

Aside from Apache Kafka, Airbyte, and Confluent, many other tools can help you manage your databases and data pipelines, and DbVisualizer is one of them. Since DbVisualizer supports more than 50 data sources, you will likely find the database that suits your use case in the list. With its extensive features, DbVisualizer lets you query your databases using a full-featured SQL editor and inspect them in depth to help you find out what’s faltering within your database instances, why, and when.

While DbVisualizer is not a real-time data pipeline and CDC tool (it is one of the best SQL clients in the world), it can solve a variety of database problems, from crafting visual database queries to exporting data sets in a variety of formats. Its secure data access features also mean that no one but you is in control of your data, and even the queries you run within the SQL client are within your control. Give DbVisualizer a shot today!

Summary

The best real-time data pipeline and change data capture (CDC) tools in 2025 include Apache Kafka, Confluent, and Airbyte. Many data engineers will be familiar with them, as they’ve been around for a long time. At the same time, these tools have come a long way since their inception, and because they produce good results for developers, many developers continue to use them to this day.

To decide which real-time data pipeline tool is the best fit for your use case, weigh their pros and cons. If you’re building high-throughput real-time data applications, use Apache Kafka. Use Confluent if you prefer commercial support or have complex Kafka deployments, and have a look at Airbyte if you need to extract, load, and sync data into warehouses from various sources.

These guidelines should help you decide, but since every tool has trade-offs, the right choice depends on your use case. Whatever you choose, though, we’re confident that none of the tools in the list will let you down.

And if real-time data pipelines and CDC aren’t what you need, or you’re looking for an SQL client, we suggest DbVisualizer: connect your databases to the tool and let DbVisualizer help you track down their issues. And if you have more questions, head over to TheTable and let our engineers tell you how to go about fixing them.

FAQ

What is a Data Pipeline?

In the database world, a data pipeline refers to a multi-step process that moves data from the place where it’s stored to the place where it’s analyzed. Along the way, a data pipeline often also organizes or cleans the data.

What are the Best Real-time Data Pipeline and CDC Tools in 2025?

There are many real-time data pipeline and CDC tools; however, DbVisualizer suggests taking a look at Apache Kafka, Confluent, or Airbyte. One of those three should fit most use cases.

Why Would I Use DbVisualizer?

Consider using DbVisualizer if you are working with one or more of the databases it supports. If you’re facing issues related to database performance or structure, DbVisualizer is a great tool to help you keep an eye on them. Perhaps your databases are performant and you just need an SQL client to assist you in your journey? DbVisualizer is equipped to help you achieve your goals in that realm too. Give it a try today: the first 21 days are on us.